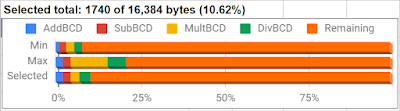

The last few weeks I have gone down a little bit of a rabbit hole writing BCD math routines for my Tiny Calculator project. The goal has been to compare different calculation strategies to find which ones are fast but still small enough to fit in the 16k of flash the MSP430 I am using has. Like the addition routines I wrote about in my last post, all of these routines were a lot smaller than I expected. There were also some other surprises about what worked best.

The last few weeks I have gone down a little bit of a rabbit hole writing BCD math routines for my Tiny Calculator project. The goal has been to compare different calculation strategies to find which ones are fast but still small enough to fit in the 16k of flash the MSP430 I am using has. Like the addition routines I wrote about in my last post, all of these routines were a lot smaller than I expected. There were also some other surprises about what worked best.Subtraction

To start with, I based this routine on the setup code for the addition routine, which saves some space. Originally my plan was to make everything modular so that I could substitute pieces of code in depending on what size and speed trade off I wanted, which is why I have separate body and ending options in the table for my addition routine. However, to save space and build one routine off the parts of another, it makes more sense to decide which version to use at the beginning. The only big difference between the two subtraction routines I tried was rolling and unrolling the main loop, with the unrolled version being about 75% faster. It's also about 25% larger but that is only around 50 bytes.

Multiplication

In addition to the straightforward method of repeated additions, I tried the quarter squares and Russian peasant algorithms I learned about doing 6502 BCD multiplication. It was a surprise that using quarter squares was over 10% slower on the MSP430 than the Russian peasant algorithm, whereas it was over 4 times faster on the 6502! Part of the difference may be the speed up I get on the MSP430 by converting each digit of one argument to binary (so it can be halved) only once and saving it, rather than converting it every time like on the 6502. Another major difference is how much slower accessing tables on the MSP430 is compared to register operations. It's interesting how the timing of the architecture can decide which algorithm works the fastest. It's also great that the Russian peasant algorithm doesn't need any tables and is therefore much smaller.

Division

For division I tried several different strategies and settled on a relatively fast version that uses subtract and shift. The first version took around 183k cycles for my test calculation. The second version got down to around 85k cycles after I realized that carries only need to propagate one place, so there is no need to propagate potential carries through the whole number every time through the loop. The next version got down to 25k cycles by creating an extra copy of the numerator shifted by one nibble. Without the shifted version, dividing 98 by 1 would take 98 subtractions. With the shifted numerator, 10 is subtracted from 98 only 9 times, then that 10 is shifted down to 1 and subtracted 8 times from the left over 8. In this case, the calculation is around five times faster. Another version I tried calculated the minimum number of subtractions possible without underflowing and then multiplied the divisor by that number and subtracted it from the total. My hope was that doing the multiplication in registers with the Russian peasant algorithm and subtracting from the memory only once would speed things up since the memory is so much slower to access. Unfortunately, I could only get it down to 33k cycles compared to the 25k cycles of the previous algorithm. This method is a little tricky at first. If the first nibble of the numerator is 9 and the first nibble of the denominator is 1, you might be tempted to think that the denominator must divide into the numerator at least 9 times, but the minimum is actually only 4. This is the case, for example, when the numerator starts with 90 and the denominator with 19. The other method I tried was the Goldschmidt algorithm, which requires two multiplications per iteration. Each multiplication takes around 20k cycles and I would apparently need around 16 iterations to get full precision. When it became clear how much slower this would be, I gave up on it in favor of subtract and shift. There are a few more tweaks I could make to the routines, but I consider the result I have now sufficient, since it is extremely small and fast and this is a relatively simple calculator. This project is taking longer than I thought it would, and I would like to finish and move on to other projects.

IAR Workbench continues to be a pain in the neck. It routinely loses breakpoints, which has lead me to go looking in my code more than once for whatever bug would prevent it from breaking execution where it should. Their system seems to work if you have source code that you are not making any changes to. Another really annoying thing is broken tab support. None of my comments line up since tab doesn't do what it's supposed to. The editor has tooltips that show the numerical address of labels in your code, but since the MSP430 flash starts at 0xC000, the editor displays all flash addresses as negative numbers. Of course, seeing the decimal equivalent of label addresses is not a crucial feature, but it does illustrate how poorly designed the product is. The cycle count per instruction also does not update unless you scroll down far enough to display it. Otherwise, it erroneously shows that the last instruction executed took as many cycles as the entire program up to that point.

It has been really interesting to work with the MSP430 at the assembly level. Having lots of registers is very convenient compared to what I am used to with the 6502. It is a little surprising that I managed to run out of them for even my simple math routines. So far my strategy has been to push registers I'm not currently using to free them up when I need extras. Allocating local variables on the stack for my functions would probably be more efficient. It seems to me that I tend to get lazy with those decisions since setting that up requires extra overhead in assembly you wouldn't have in C. Mainly I am thinking about keeping local variable addresses relative to the stack pointer straight when I use the stack for other things. The smart solution is probably to push the space I need on the stack then make a copy of the stack pointer into a register and use that as the base for local variable addresses, so the stack pointer is free to do other things. From there, I would still need to determine which variables could be reused at various points in the function to save memory. It also seems like I am more hesitant to refactor code in assembly than I would be in C, which became an issue when I based math routines on previous versions by adding extra code. All of this makes me appreciate what a C compiler does behind the scenes, including using registers efficiently.

;Start with 34

MOV #0x34, R4

;Calculation

ADD #0x66,R4 ;2 cycles

INV R4 ;1 cycle

;Table lookup

MOV table(R4),R5 ;3 cycles

The INV instruction is actually a pseudo-op that XORs the register with 0xFFFF. It is only 1 cycle because 0xFFFF is one of the values that the constant generator produces, so it doesn't have to waste cycles loading the constant from memory. Another thing I noticed is that MOV.B #0xFF,R4 is only one cycle and one word since it uses the constant generator, while MOV #0xFF,R4 stores the word 0x00FF in memory and therefore takes two cycles and two words. The interesting thing is that the MOV.B version clears the high byte of the register, so both instructions have exactly the same result. Clearing the high byte of the register every time a byte operation takes place is convenient in some cases but not in others. There doesn't seem to be a way, for example, to OR one byte directly from memory with a word stored in a register without loading the byte into a register first. It is also curious that the @Rn indirect addressing mode cannot be used as a destination. Instead, you would have to provide an offset, even if it is 0, like this: 0(Rn). That offset is stored in memory and cannot be generated by the constant generator. This seems like a weird design choice to me since you might be able to save program space by supporting @Rn for destinations. Maybe the logic is that @Rn without auto-increment would need an additional word-long instruction to advance the destination pointer, so providing the offset explicitly every time doesn't leave you any worse off space-wise. In any case, these few shortcomings don't bother me, since the chip is otherwise fun to work with and was apparently designed to be low-power, rather than high-performance.

Next, I plan to work on CORDIC routines for the trig and log functions. That will probably be the biggest chunk of program memory I need on this project. Considering how small the four basic functions turned out to be, I hope that the CORDIC routines will be fairly small as well. After that, I will need some font data for the LCD and more code for the interface. There is a possibility that there will be a few kilobytes of free memory at the end, in which case I might be able to add simple key stroke programming.

No comments:

Post a Comment